Artificial intelligence (AI) is affecting many aspects of society, and universities such as Whitworth are not exempt. Whitworth faculty members feel there isn’t enough conversation about AI on campus.

To address this, professors from the Computer Science (CS) department have published a series of articles to encourage more conversation about the impact of AI on campus. “We think there should be more conversation about it,” said Pete Tucker, a computer science professor and one of the faculty members who had the idea to start the blog. “People should be talking about AI,” he said.

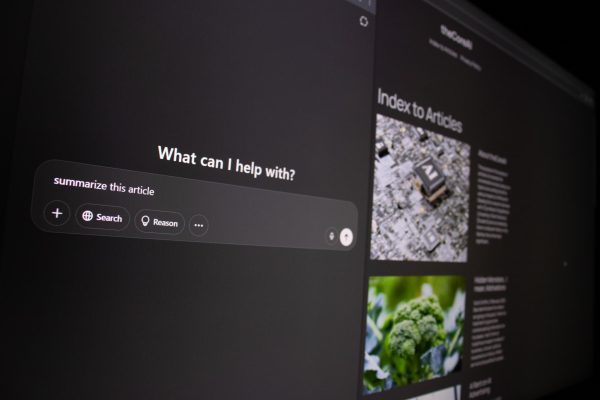

Their website, theCoreAI, currently features six articles written by various faculty members, including Tucker and Matthew Bell, a fellow computer science professor. “There seems to be kind of a culture of ignoring the problem [of AI] and hoping that it will go away,” said Bell. “And the matter of fact is, it is a problem,” he said.

These articles discuss how AI is used both positively and negatively across many areas of society and offer insights into how people can address these issues by talking about them. One of the main topics in campus discussions of AI is how students from various disciplines use it for assignments, according to Tucker.

“I’m virtually certain that my students are using generative AI all over the place,” said Bell.

By starting the conversation about AI on campus, the faculty members hope to spark discussion about the use of AI in their disciplines.

“Students are going to use AI, and they need to,” said Bell. “Not simply so they can make a product… they are going to need to use it so that they might be leaders in its future innovation, so they might redirect its evolution in life,” he added.

Although AI is capable of many things, it still makes a lot of mistakes.

On the website, the descriptions of theCoreAI articles were generated by Copilot AI. Two descriptions are posted, and the most recent summary, generated on Feb. 11, contains a notable mistake.

“It actually made up an article that we hadn’t even written yet,” said Tucker.

The summary lists seven articles, one more than are currently published. An accompanying disclaimer also says that it took three tries to generate a detailed summary. “We thought it was so bad we had to put it on the front page,” said Tucker.

AI also can’t understand values specific to an organization or community. One of Tucker’s articles examines the role of AI in jobs, noting that AI has its benefits but lacks many human skills. The article states that “Generative AI cannot understand the mission and values of the organization to generate results that align.” An assignment that Tucker gave to his students illustrates the point.

His students were working on a project for a local organization. When AI was asked to generate a piece of the project, the result was generic because the AI didn’t have the local knowledge the students had.

“I think the thing that I learned as I put that [article] together was just the different ways AI is useful, but also the different ways where AI falls really short,” said Tucker.

AI influences every discipline on campus, and the faculty members hope to open the discussion to anyone who wants to join.

“Anybody who has an idea or thoughts on AI, we want to encourage those articles as well,” said Tucker. “As long as we keep thinking of ideas, and as long as people… hopefully have these discussions, we’ll keep pushing on it,” he said.